Introduction

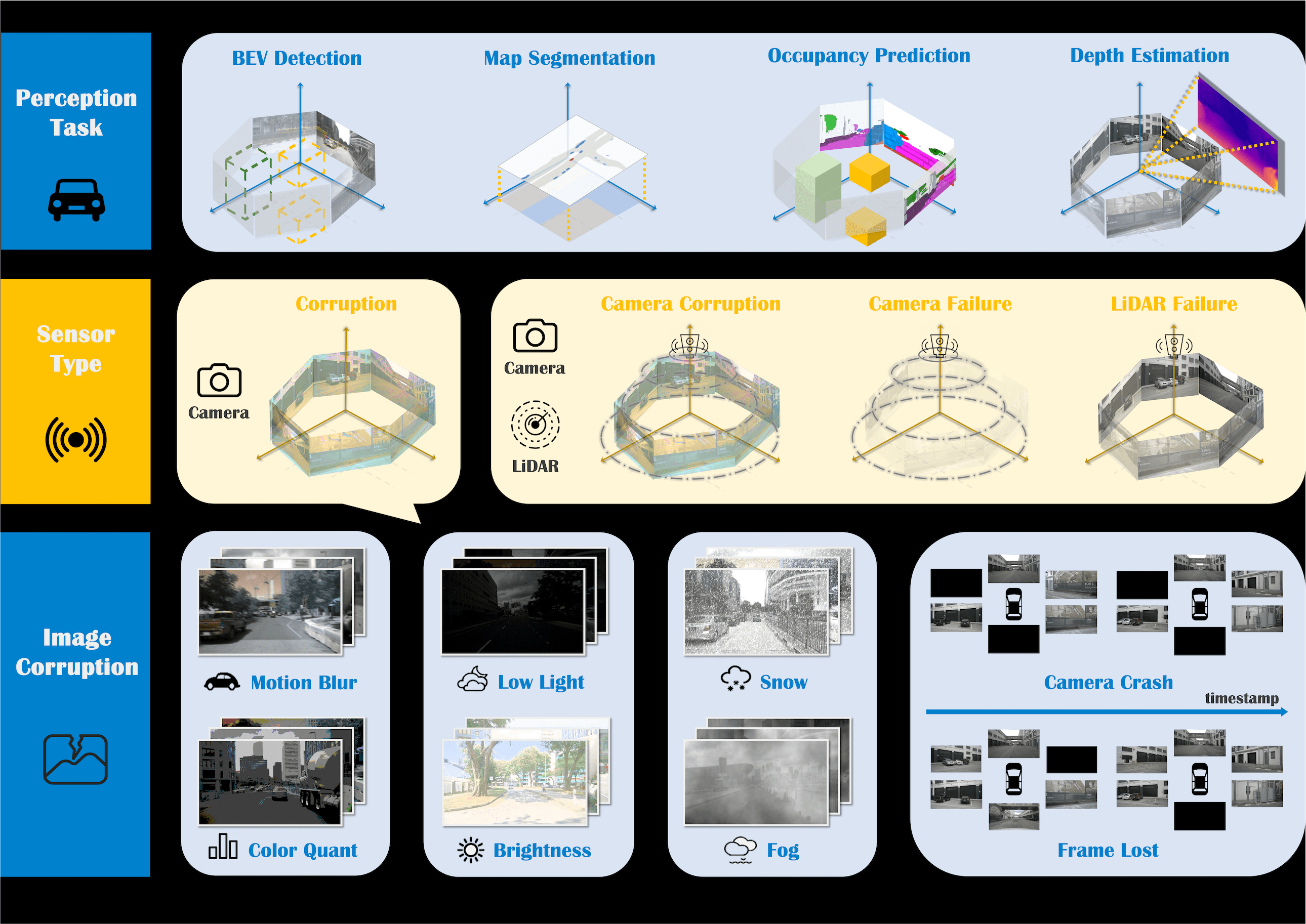

In the rapidly evolving domain of autonomous driving, the accuracy and resilience of perception systems are paramount. Recent advancements, particularly in bird's eye view (BEV) representations and LiDAR sensing technologies, have significantly improved in-vehicle 3D scene perception.

Yet the robustness of 3D scene perception methods under varied and challenging conditions, which is integral to safe operation, has been insufficiently assessed. To fill this gap, we introduce The RoboDrive Challenge, which seeks to push the frontiers of robust autonomous driving perception.

RoboDrive is one of the first benchmarks targeting the Out-of-Distribution (OoD) robustness of state-of-the-art autonomous driving perception models. It centers on two mainstream topics: common corruptions and sensor failures.

Topic One: Corruptions

There are eighteen real-world corruption types in total, grouped into three categories:

- Weather and lighting conditions, such as bright, low-light, foggy, and snowy conditions.

- Movement and acquisition failures, such as blur caused by vehicle motion.

- Data processing issues, such as noise and quantization artifacts caused by hardware malfunctions.

Topic Two: Sensor Failures

Additionally, we probe the robustness of 3D scene perception under camera and LiDAR sensor failures:

- Loss of certain camera frames during the driving system sensing process.

- Loss of one or more camera views during the driving system sensing process.

- Loss of the roof-top LiDAR view during the driving system sensing process.

Challenge Tracks

The RoboDrive Challenge consists of five tracks, each focusing on one of the following 3D scene perception tasks:

- Track 1: Robust BEV Detection.

- Track 2: Robust Map Segmentation.

- Track 3: Robust Occupancy Prediction.

- Track 4: Robust Depth Estimation.

- Track 5: Robust Multi-Modal BEV Detection.

For additional implementation details, kindly refer to our RoboBEV, RoboDepth, and Robo3D projects.

E-mail: robodrive.2024@gmail.com.

Venue

The RoboDrive Challenge is affiliated with the 41st IEEE International Conference on Robotics and Automation (ICRA 2024).

ICRA is the flagship conference of the IEEE Robotics and Automation Society. ICRA 2024 will be held from May 13th to 17th, 2024, in Yokohama, Japan.

The ICRA competitions provide a unique venue for state-of-the-art technical demonstrations from research labs throughout academia and industry. For additional details, kindly refer to the ICRA 2024 website.

RoboDrive Workshop

Our workshop is scheduled to be held on Wednesday, May 15th, 2024, from 1:00 P.M. to 5:00 P.M. JST (UTC+9).

For on-site attendance: Visit us at the Competition Hall, Pacific Convention Plaza Yokohama (PACIFICO Yokohama) 1-1-1, Minato Mirai, Nishi-ku, Yokohama 220-0012, Japan.

For online attendance: Join us using this ZOOM link.

The workshop slides can be found here.

Toolkit

Challenge One: Corruptions

Challenge Two: Sensor Failures

Timeline

Team Up

Register your team by filling in this Google Form.

Release of Training and Evaluation Data

Download the data from the competition toolkit.

Competition Servers Online @ CodaLab

Phase One Deadline

Shortlisted teams are invited to participate in the next phase.

Phase Two Deadline

Don't forget to include the code link in your submissions.

Award Decision Announcement

Announced in conjunction with the ICRA 2024 conference.

Awards

1st Place

Cash $5,000 + Certificate

- This award will be given to five awardees, one per track; each awardee receives $1,000.

2nd Place

Cash $3,000 + Certificate

- This award will be given to five awardees, one per track; each awardee receives $600.

3rd Place

Cash $2,000 + Certificate

- This award will be given to five awardees, one per track; each awardee receives $400.

Innovative Award

Certificate

- This award will be selected by the program committee and given to ten awardees; two per track.

Submission & Evaluation

In this competition, all participants are expected to adopt the official nuScenes dataset for model training and our robustness probing sets for model evaluation. Additional data sources are NOT allowed in this competition.

Kindly refer to DATA_PREPARE.md for details on preparing the training and evaluation data.

For all five tracks in this RoboDrive competition, the participants are expected to submit their predictions to the EvalAI servers for model evaluation.

To facilitate the training and evaluation, we have provided detailed instructions and a baseline model for each track. Kindly refer to GET_STARTED.md for additional details.

FAQs

Please refer to the Frequently Asked Questions for more detailed rules and conditions of this competition.

Contact

Organizers

Sponsors

This competition is generously supported by HUAWEI Noah's Ark Lab.

Program Committee

References

[1] S. Xie, L. Kong, W. Zhang, J. Ren, L. Pan, K. Chen, and Z. Liu. "Benchmarking and Analyzing Bird's Eye View Perception Robustness to Corruptions," Preprint, 2023. [Link] [Code]

[2] L. Kong, S. Xie, H. Hu, L. X. Ng, B. R. Cottereau, and W. T. Ooi. "RoboDepth: Robust Out-of-Distribution Depth Estimation under Corruptions," NeurIPS, 2023. [Link] [Code]

[3] L. Kong, Y. Liu, X. Li, R. Chen, W. Zhang, J. Ren, L. Pan, K. Chen, and Z. Liu. "Robo3D: Towards Robust and Reliable 3D Perception against Corruptions," ICCV, 2023. [Link] [Code]

Affiliations

This competition is hosted by Shanghai AI Laboratory.

Acknowledgements

This competition is developed based on the RoboBEV, RoboDepth, and Robo3D projects.

The evaluation sets of this competition are constructed based on the nuScenes dataset from Motional AD LLC.

Part of the content of this website is adopted from The RoboDepth Challenge @ ICRA 2023. We would like to thank Jiarun Wei and Shuai Wang for building up the template from the SeasonDepth website.

Affiliated Project

This project is affiliated with DesCartes, a CNRS@CREATE program on Intelligent Modeling for Decision-Making in Critical Urban Systems.

Winning Team

We are glad to announce the winning teams of the 2024 RoboDrive Challenge!

Track 1: Robust BEV Detection

1st: DeepVision

- Team: Xu Cao, Hao Lu, Ying-Cong Chen

- Affiliation: HKUST (Guangzhou), HKUST

2nd: Ponyville Autonauts Ltd

- Team: Caixin Kang, Xinning Zhou, Chengyang Ying, Wentao Shang, Xingxing Wei, Yinpeng Dong

- Affiliation: Beihang U., Tsinghua U., Hefei U. of Technology

3rd: CyberBEV

- Team: Bo Yang, Shengyin Jiang, Zeliang Ma, Dengyi Ji, Haiwen Li

- Affiliation: Beijing U. of Posts and Telecommunications, Beijing U. of Technology

Track 2: Robust Map Segmentation

1st: SafeDrive-SSR

- Team: Xingliang Huang, Yu Tian

- Affiliation: U. of Chinese Academy of Sciences, Tsinghua U.

2nd: CrazyFriday

- Team: Genghua Kou, Fan Jia, Yingfei Liu, Tiancai Wang, Ying Li

- Affiliation: Beijing Institute of Technology, Megvii Technology

3rd: Samsung Research

- Team: Xiaoshuai Hao, Yifan Yang, Hui Zhang, Mengchuan Wei, Yi Zhou, Haimei Zhao, Jing Zhang

- Affiliation: Samsung R&D Institute China Beijing, The U. of Sydney

Track 3: Robust Occupancy Prediction

1st: ViewFormer

- Team: Jinke Li, Xiao He, Xiaoqiang Cheng

- Affiliation: UISEE

2nd: APEC Blue

- Team: Bingyang Zhang, Lirong Zhao, Dianlei Ding, Fangsheng Liu, Yixiang Yan, Hongming Wang

- Affiliation: SongGuo7, Beijing APEC Blue Technology Co., Ltd, Beihang U.

3rd: hm.unilab

- Team: Nanfei Ye, Lun Luo, Yubo Tian, Yiwei Zuo, Zhe Cao, Yi Ren, Yunfan Li, Wenjie Liu, Xun Wu

- Affiliation: Haomo.ai

Track 4: Robust Depth Estimation

1st: HIT-AIIA

- Team: Yifan Mao, Ming Li, Jian Liu, Jiayang Liu, Zihan Qin, Cunxi Chu, Jialei Xu, Wenbo Zhao, Junjun Jiang, Xianming Liu

- Affiliation: Harbin Institute of Technology

2nd: BUAA-Trans

- Team: Ziyan Wang, Chiwei Li, Shilong Li, Chendong Yuan, Songyue Yang, Wentao Liu, Peng Chen, Bin Zhou

- Affiliation: Beihang U.

3rd: CUSTZS

- Team: Yubo Wang, Chi Zhang, Jianhang Sun

- Affiliation: Zhongshan Institute, Changchun U. of Science and Technology

Track 5: Robust Multi-Modal BEV Detection

1st: safedrive-promax

- Team: Xiao Yang, Hai Chen, Lizhong Wang

- Affiliation: Tsinghua U.

2nd: Ponyville Autonauts Ltd

- Team: Caixin Kang, Xinning Zhou, Chengyang Ying, Wentao Shang, Xingxing Wei, Yinpeng Dong

- Affiliation: Beihang U., Tsinghua U., Hefei U. of Technology

3rd: HITSZrobodrive

- Team: Dongyi Fu, Yongchun Lin, Huitong Yang, Haoang Li, Yadan Luo, Xianjing Cheng, Yong Xu

- Affiliation: Harbin Institute of Technology (Shenzhen), Guangdong U. of Technology, HKUST (Guangzhou), The U. of Queensland

Technical Report

- Xu Cao, Hao Lu, and Ying-Cong Chen. “Towards Robust Multi-Camera 3D Object Detection through Temporal Sequence Mix Augmentation”, Technical Report, 2024.

- Caixin Kang, Xinning Zhou, Chengyang Ying, Wentao Shang, Xingxing Wei, and Yinpeng Dong. “MVE: Multi-View Enhancer for Robust Bird's Eye View Object Detection”, Technical Report, 2024.

- Bo Yang, Shengyin Jiang, Zeliang Ma, Dengyi Ji, and Haiwen Li. “FocalAngle3D: An Angle-Enhanced Two-Stage Model for 3D Detection”, Technical Report, 2024.

- Xingliang Huang and Yu Tian. “Models and Data Enhancements for Robust Map Segmentation in Autonomous Driving”, Technical Report, 2024.

- Xiaoshuai Hao, Yifan Yang, Hui Zhang, Mengchuan Wei, Yi Zhou, Haimei Zhao, and Jing Zhang. “Using Temporal Information and Mixing-Based Data Augmentations for Robust HD Map Construction”, Technical Report, 2024.

- Genghua Kou, Fan Jia, Yingfei Liu, Tiancai Wang, and Ying Li. “MultiViewRobust: Scaling Up Pretrained Models for Robust Map Segmentation”, Technical Report, 2024.

- Jinke Li, Xiao He, and Xiaoqiang Cheng. “ViewFormer: Spatiotemporal Modeling for Robust Occupancy Prediction”, Technical Report, 2024.

- Bingyang Zhang, Lirong Zhao, Dianlei Ding, Fangsheng Liu, Yixiang Yan, and Hongming Wang. “Robust Occupancy Prediction based on Enhanced SurroundOcc”, Technical Report, 2024.

- Nanfei Ye, Lun Luo, Xun Wu, Yubo Tian, Zhe Cao, Yunfan Li, Yiwei Zuo, Wenjie Liu, and Yi Ren. “Improving Out-of-Distribution Robustness of Occupancy Prediction Networks with Advanced Loss Functions”, Technical Report, 2024.

- Yifan Mao, Ming Li, Jian Liu, Jiayang Liu, Zihan Qin, Chunxi Chu, Jialei Xu, Wenbo Zhao, Junjun Jiang, and Xianming Liu. “DINO-SD for Robust Multi-View Supervised Depth Estimation”, Technical Report, 2024.

- Ziyan Wang, Chiwei Li, Shilong Li, Chendong Yuan, Songyue Yang, Wentao Liu, Peng Chen, and Bin Zhou. “Fusing Features Across Scales: A Semi-Supervised Attention-Based Approach for Robust Depth Estimation”, Technical Report, 2024.

- Yubo Wang, Chi Zhang, and Jianhang Sun. “SD-ViT: Performance and Robustness Enhancements of MonoViT for Multi-View Depth Estimation”, Technical Report, 2024.

- Hai Chen, Xiao Yang, and Lizhong Wang. “ASF: Robust 3D Object Detection by Solving Sensor Failures”, Technical Report, 2024.

- Caixin Kang, Xinning Zhou, Chengyang Ying, Wentao Shang, Xingxing Wei, and Yinpeng Dong. “Cross-Modal Transformers for Robust Multi-Modal BEV Detection”, Technical Report, 2024.

- Dongyi Fu, Yongchun Lin, Huitong Yang, Haoang Li, Yadan Luo, Xianjing Cheng, and Yong Xu. “RobuAlign: Robust Alignment in Multi-Modal 3D Object Detection”, Technical Report, 2024.

Terms & Conditions

This competition is made freely available to academic and non-academic entities for non-commercial purposes such as academic research, teaching, scientific publications, or personal experimentation. Permission is granted to use the data provided that you agree:

1. That the data in this competition comes “AS IS”, without express or implied warranty. Although every effort has been made to ensure accuracy, we do not accept any responsibility for errors or omissions.

2. That you may not use the data in this competition or any derivative work for commercial purposes, such as licensing or selling the data or using the data to obtain commercial gain.

3. That you include a reference to RoboDrive (including the benchmark data and the specially generated data for academic challenges) in any work that makes use of the benchmark. For research papers, please cite our preferred publications as listed on our webpage.

To ensure a fair comparison among all participants, we require:

1. All participants must follow the exact same data configuration when training and evaluating their algorithms. Please do not use any public or private datasets other than those specified for model training.

2. The theme of this competition is to probe the out-of-distribution robustness of autonomous driving perception models. Therefore, any use of the corruption and sensor failure types designed in this benchmark is strictly prohibited, including any atomic operation that forms part of any of these corruptions.

3. To ensure that the above two rules are followed, each participant must submit code that reproduces their results before the final results are announced. The code is for examination purposes only; we will manually verify the training and evaluation of each participant's model.